The Case for Crypto AI: Decoding the Hype with the Synergy Matrix

How the AI-Blockchain Synergy Matrix can serve as a guide for identifying impactful areas.

The rapid advancement of AI has created an unprecedented concentration of computational power, data, and algorithmic capabilities within a handful of large technology companies. As AI systems become increasingly integral to our society, questions about accessibility, transparency, and control have moved to the forefront of technical and policy discussions. Against this backdrop, the intersection of blockchain and AI presents an intriguing alternative path, one that could reshape how AI systems are developed, deployed, scaled, and governed.

Rather than advocating for a complete disruption of existing AI infrastructure, we explore specific use cases where decentralized approaches might offer unique advantages while acknowledging scenarios where traditional centralized systems remain more practical.

Several key questions guide our analysis:

How do the fundamental properties of decentralized systems complement or conflict with the requirements of modern AI systems?

Where along the AI development stack – from data collection to model training to inference – can blockchain technologies provide meaningful improvements?

What technical and economic trade-offs emerge when decentralizing different aspects of AI systems?

Current Constraints in the AI Stack:

Epoch AI has done an excellent job of putting together a detailed breakdown of the current constraints in the AI stack. Their research projects the constraints on scaling AI training compute through 2030. The chart evaluates the different bottlenecks that could limit the expansion of AI training compute, measuring the compute of a training run in floating-point operations (FLOP).

The scaling of AI training compute is likely to be limited by a combination of power availability, chip manufacturing capacity, data scarcity, and latency. Each of these factors imposes a different ceiling on achievable compute; the latency wall sets the highest ceiling and is therefore the last of the four to bind.

This chart emphasizes the need for advances in hardware and energy efficiency, for ways to unlock data trapped on edge devices, and for better networking to support future AI growth.

Power Constraints (Performance):

Feasibility of Scaling Power Infrastructure by 2030: Projections indicate that data center campuses with capacities between 1 and 5 gigawatts (GW) are likely achievable by 2030. However, this growth is contingent upon substantial investments in power infrastructure and on overcoming potential logistical and regulatory hurdles.

Power is limited by energy availability and grid infrastructure, yet this ceiling still allows training compute to grow up to roughly 10,000x beyond current levels.

Chip Production Capacity (Verifiability):

The production of chips capable of supporting these advanced computations (e.g., NVIDIA H100, Google TPU v5) is currently limited by advanced packaging capacity (e.g., TSMC CoWoS). This directly affects the availability and scalability of verifiable computation.

Chip supply is bottlenecked by manufacturing and supply chains, yet this ceiling still permits roughly a 50,000x increase in training compute.

Advanced chips are essential for enabling secure enclaves, or Trusted Execution Environments (TEEs), on edge devices; these enclaves can attest to the integrity of computations and protect sensitive data.

Data Scarcity (Privacy):

Data Scarcity and AI Training: The disparity between the indexed web and the whole web highlights the accessibility challenges for AI training. Much of the potential data is either private or not indexed, limiting its utility.

Need for Multimodal AI: Large stocks of image and video data suggest the growing importance of multimodal AI systems capable of processing data beyond text.

Future Data Challenges: This is AI's next frontier: figuring out how to tap into high-quality private data while giving data owners control and fair value.

Latency Wall (Performance):

Inherent Latency Constraints in Model Training: As AI models grow in size, the time required for a single forward and backward pass increases due to the sequential nature of computations. This introduces a fundamental latency that cannot be bypassed, limiting the speed at which models can be trained.

Challenges in Scaling Batch Sizes: To mitigate latency, one approach is to increase the batch size, allowing more data to be processed in parallel. However, there are practical limits to batch size scaling, such as memory constraints and diminishing returns in model convergence. These limitations make it challenging to offset the latency introduced by larger models.
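To make the latency wall concrete, here is a simplified back-of-the-envelope model, written as a minimal Python sketch: optimizer steps run one after another, so training takes at least (total tokens ÷ batch size) × (minimum time per step). The function name, the token-based units, the example numbers, and the `critical_batch_tokens` cap (a crude, hypothetical stand-in for the diminishing returns of very large batches) are illustrative assumptions, not Epoch AI's methodology.

```python
import math
from typing import Optional


def min_training_time_seconds(
    total_tokens: float,
    batch_tokens: float,
    step_latency_s: float,
    critical_batch_tokens: Optional[float] = None,
) -> float:
    """Lower bound on wall-clock training time under a simplified latency model."""
    if min(total_tokens, batch_tokens, step_latency_s) <= 0:
        raise ValueError("inputs must be positive")
    effective_batch = batch_tokens
    if critical_batch_tokens is not None:
        if critical_batch_tokens <= 0:
            raise ValueError("critical_batch_tokens must be positive")
        # Crude assumption: batch tokens beyond the hypothetical critical
        # batch size no longer reduce the number of steps needed.
        effective_batch = min(batch_tokens, critical_batch_tokens)
    # Each optimizer step needs the weights produced by the previous step,
    # so steps are sequential no matter how much hardware is available.
    sequential_steps = math.ceil(total_tokens / effective_batch)
    return sequential_steps * step_latency_s


# Hypothetical numbers: 1e12 training tokens, 0.5 s minimum latency per step.
for batch in (1e7, 2e7, 4e7):
    t = min_training_time_seconds(1e12, batch, 0.5, critical_batch_tokens=2e7)
    print(f"batch={batch:.0e} tokens -> at least {t / 3600:.1f} h")
```

With these made-up numbers, going from 1e7 to 2e7 tokens per batch halves the bound, but 4e7 buys nothing further because of the cap. That flat region is the ceiling the latency wall describes: past a point, bigger batches cannot offset slower per-step passes.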

Foundation:

Decentralized AI Triangle

AI constraints such as data scarcity, compute limitations, latency, and production capacity map onto the Decentralized AI Triangle, which balances Privacy, Verifiability, and Performance. These properties are fundamental to ensuring the effectiveness, trust, and scalability of decentralized AI.

This table explores the key trade-offs among the three properties, covering their descriptions, enabling techniques, and associated challenges:

Privacy: Focuses on protecting sensitive data during training and inference. Key techniques include TEEs, secure multi-party computation (MPC), Federated Learning, fully homomorphic encryption (FHE), and Differential Privacy. Trade-offs include performance overhead, reduced transparency that makes verification harder, and scalability limitations.

Verifiability: Ensures the correctness and integrity of computations using zero-knowledge proofs (ZKPs), cryptographic credentials, and verifiable computation. However, balancing verifiability against privacy and performance introduces resource demands and computational delays.

Performance: Refers to executing AI computations efficiently and at scale, leveraging distributed compute infrastructure, hardware acceleration, and efficient networking. Trade-offs include slower computations due to privacy-enhancing techniques and overhead from verifiable computations.
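Differential privacy is the easiest of these techniques to show in a few lines, and it makes the privacy trade-off visible: a smaller privacy budget ε means stronger privacy but more noise, and therefore less accurate answers. The minimal Python sketch below applies the Laplace mechanism to a counting query; the function names and the example data are hypothetical, and it is a teaching sketch rather than a hardened implementation.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two i.i.d. exponential variables with mean `scale`
    is Laplace-distributed with location 0 and scale `scale`.
    """
    rate = 1.0 / scale  # expovariate takes a rate, i.e. 1 / mean
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(
    records: Iterable[int],
    predicate: Callable[[int], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Return an epsilon-differentially-private count of matching records."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one record changes a count by at most 1, so the
    # query's sensitivity is 1 and the Laplace scale is 1 / epsilon.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical example: how many users in a private dataset are over 30?
ages = [23, 35, 41, 52, 67, 29, 33]
rng = random.Random(42)
for eps in (0.1, 1.0, 10.0):
    print(eps, round(private_count(ages, lambda a: a > 30, eps, rng), 2))
```

Because the expected absolute value of Laplace noise equals its scale (1/ε for a count), moving ε from 0.1 to 10 shrinks the typical error from about 10 to about 0.1. That accuracy cost of stronger privacy is one concrete form of the trade-offs listed above.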

Blockchain Trilemma:

The Blockchain Trilemma captures the core trade-offs every blockchain must face:

Decentralization: Keeping the network distributed across many independent nodes, preventing any single entity from controlling the system

Security: Ensuring the network remains safe from attacks and maintains data integrity, which typically requires more validation and consensus overhead

Scalability: Handling high transaction volumes quickly and cheaply, but this usually means sacrificing either decentralization (fewer nodes) or security (less thorough validation)

For instance, Ethereum prioritizes decentralization and security, which results in lower throughput. For a deeper look at the trade-offs in blockchain architecture, refer to this.

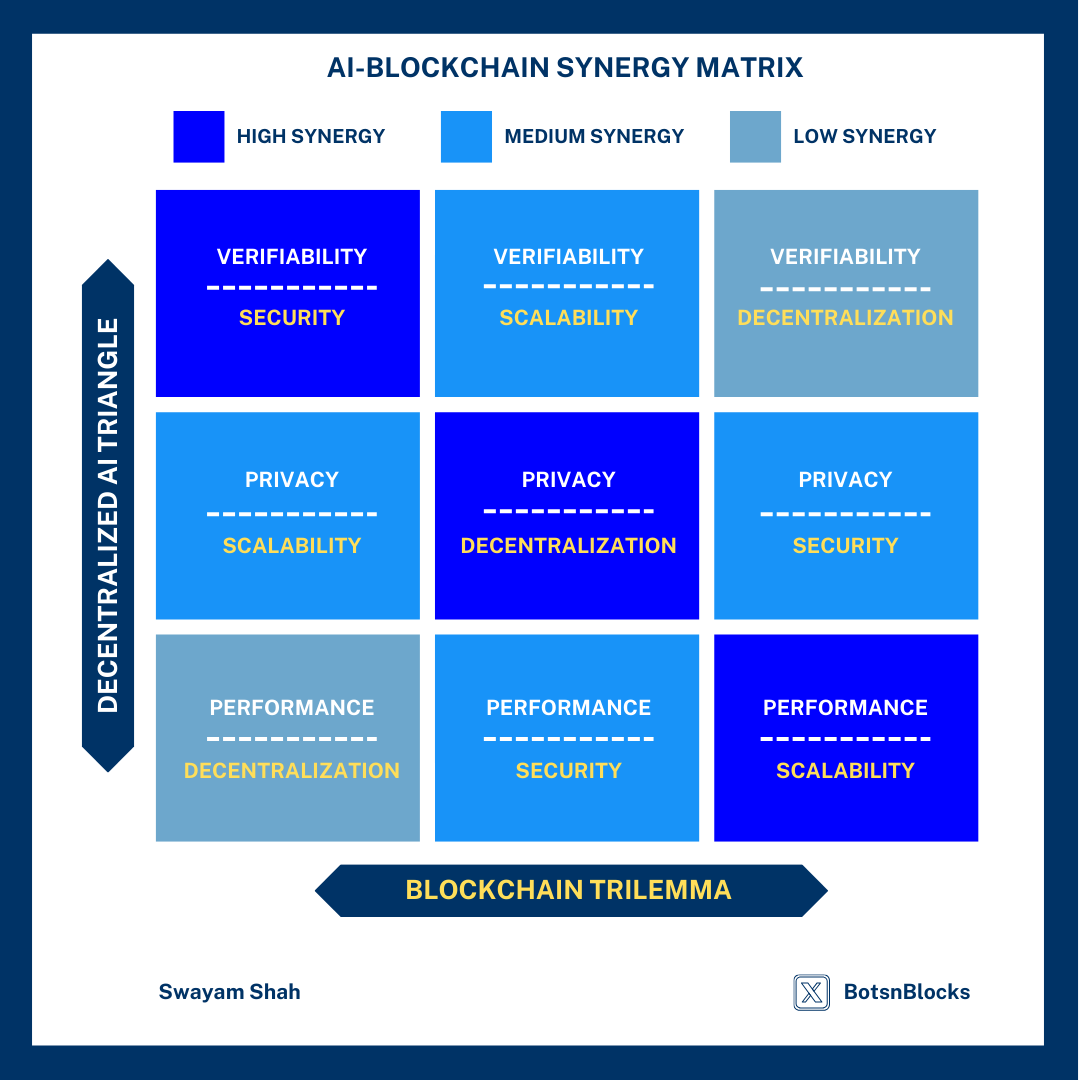

AI-Blockchain Synergy Analysis Matrix (3x3)

The intersection of AI and blockchain is a complex dance of trade-offs and opportunities. This matrix maps out where these two technologies create friction, find harmony, and occasionally amplify each other's weaknesses.

How the Synergy Matrix Works

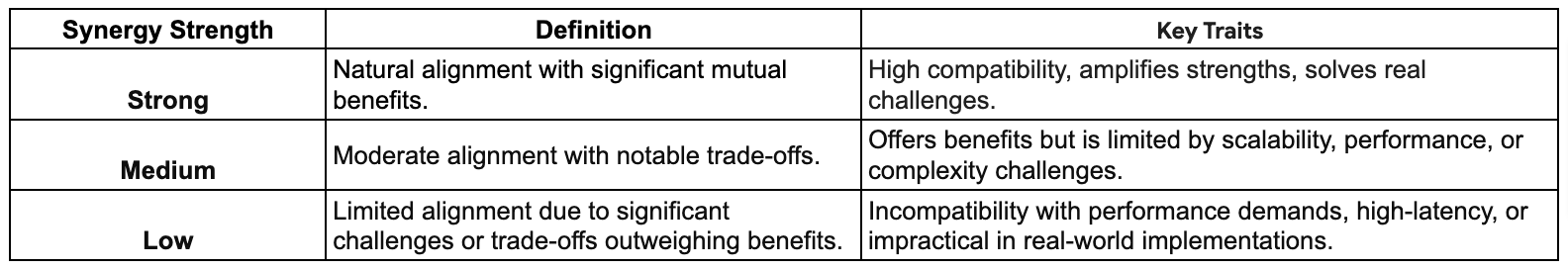

The synergy strength reflects the level of compatibility and impact between blockchain and AI properties in specific categories. It is determined by how well the two technologies address mutual challenges and enhance each other’s functionality.

Example 1: Performance + Decentralization (Weak Synergy). In decentralized networks such as Bitcoin or Ethereum, performance is inherently constrained by resource variability, high communication latency, transaction costs, and consensus mechanisms. For AI applications that require low-latency, high-throughput processing, such as real-time AI inference or large-scale model training, these networks struggle to provide the speed and computational reliability needed for optimal performance.

Example 2: Privacy + Decentralization (Strong Synergy). Privacy-preserving AI techniques such as Federated Learning benefit from blockchain’s decentralized infrastructure to protect user data while enabling collaboration. SoraChain AI exemplifies this by enabling federated learning in which data ownership is preserved, so data owners can contribute high-quality data for training while retaining their privacy.
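The federated learning pattern in Example 2 can be sketched with the widely used FedAvg aggregation rule: each client trains on its own data and sends back only model parameters, and a coordinator averages them, weighted by each client's sample count. The minimal Python sketch below uses a toy linear model y = w·x + b; the function names and the data are hypothetical, and it does not show SoraChain AI's protocol or any on-chain component (where coordination or contribution records might live in a blockchain setting).

```python
from typing import List, Sequence, Tuple

Params = Tuple[float, float]         # (w, b) for the toy model y = w * x + b
Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave the client


def local_update(params: Params, data: Dataset, lr: float = 0.05, epochs: int = 5) -> Params:
    """Full-batch gradient descent on mean squared error, run on one client."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def fed_avg(updates: Sequence[Tuple[Sequence[float], int]]) -> Tuple[float, ...]:
    """FedAvg aggregation: average client parameters weighted by sample count."""
    if not updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    if total <= 0 or any(len(p) != dim for p, _ in updates):
        raise ValueError("invalid client updates")
    return tuple(sum(p[i] * n for p, n in updates) / total for i in range(dim))


def run_rounds(global_params: Params, clients: Sequence[Dataset], rounds: int) -> Params:
    """Each round: broadcast the global model, train locally, then aggregate."""
    for _ in range(rounds):
        # Only parameters and sample counts reach the coordinator, never raw data.
        updates = [(local_update(global_params, data), len(data)) for data in clients]
        w, b = fed_avg(updates)
        global_params = (w, b)
    return global_params


# Two hypothetical clients whose private data both follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(1.0, 3.0), (3.0, 7.0)],
]
w, b = run_rounds((0.0, 0.0), clients, rounds=200)
print(f"w={w:.3f}, b={b:.3f}")
```

Because this toy data is noise-free and every client shares the same optimum, the global model converges to about w = 2, b = 1 without any client revealing its (x, y) pairs.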

This matrix aims to empower the industry to navigate the confluence of blockchain and AI with clarity, helping innovators and investors prioritize what works, explore what’s promising, and avoid what’s merely speculative.

Along one axis, we have the fundamental properties of decentralized AI systems: verifiability, privacy, and performance. On the other, we face blockchain's eternal trilemma: security, scalability, and decentralization. When these forces collide, they create a spectrum of synergies – from powerful alignments to challenging mismatches.

For instance, when verifiability meets security (high synergy), we get robust systems for proving AI computations. But when performance demands clash with decentralization (low synergy), we face the hard reality of distributed systems' overhead. Some combinations, like privacy and scalability, land in the middle – promising but complicated.
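Structurally, the matrix is a lookup table from an (AI property, blockchain property) pair to a synergy rating. The Python sketch below encodes only the four cells the article rates explicitly in its text (Verifiability + Security and Privacy + Decentralization as strong, Privacy + Scalability as medium, Performance + Decentralization as weak) and leaves the other five unrated instead of guessing; the names `Synergy`, `synergy`, and `render_grid` are illustrative, not part of any published tool.

```python
from enum import Enum
from typing import Dict, Optional, Tuple


class Synergy(Enum):
    STRONG = "strong"
    MEDIUM = "medium"
    WEAK = "weak"


AI_PROPERTIES = ("verifiability", "privacy", "performance")
CHAIN_PROPERTIES = ("security", "scalability", "decentralization")

# Only the cells the article rates explicitly; every other pair stays unrated.
RATED_CELLS: Dict[Tuple[str, str], Synergy] = {
    ("verifiability", "security"): Synergy.STRONG,
    ("privacy", "decentralization"): Synergy.STRONG,
    ("privacy", "scalability"): Synergy.MEDIUM,
    ("performance", "decentralization"): Synergy.WEAK,
}


def synergy(ai_property: str, chain_property: str) -> Optional[Synergy]:
    """Look up one cell of the 3x3 matrix; returns None if the cell is unrated."""
    ai, chain = ai_property.lower(), chain_property.lower()
    if ai not in AI_PROPERTIES or chain not in CHAIN_PROPERTIES:
        raise ValueError(f"unknown pairing: {ai_property} + {chain_property}")
    return RATED_CELLS.get((ai, chain))


def render_grid() -> str:
    """Render the matrix as plain text, marking unrated cells with '?'."""
    width = 18
    lines = ["".ljust(width) + "".join(c.ljust(width) for c in CHAIN_PROPERTIES)]
    for ai in AI_PROPERTIES:
        cells = []
        for chain in CHAIN_PROPERTIES:
            rating = synergy(ai, chain)
            cells.append(rating.value if rating is not None else "?")
        lines.append(ai.ljust(width) + "".join(x.ljust(width) for x in cells))
    return "\n".join(lines)


print(render_grid())
print(synergy("Privacy", "Decentralization"))
```

Returning `None` for unrated cells keeps the sketch honest: a project pitched on a pairing the matrix does not rate gets flagged for closer evaluation rather than silently scored.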

Why This Matters

A Strategic Compass: Not every AI or blockchain project delivers tangible value. The matrix points decision-makers, researchers, and developers toward high-synergy categories that address real-world challenges, such as ensuring data privacy in federated learning or using decentralized compute for scalable AI training.

Focusing on Impactful Innovation and Resource Allocation: By understanding where the strongest synergies lie (e.g., Security + Verifiability, Privacy + Decentralization), this tool allows stakeholders to concentrate their efforts and investments on areas that promise measurable impact, avoiding energy spent on weak or impractical integrations.

Guiding the Ecosystem’s Evolution: As both AI and blockchain evolve, the matrix can serve as a dynamic guide to evaluate emerging projects, ensuring they align with meaningful use cases rather than contributing to overhyped narratives.

This table summarizes these combinations by their synergy strength, from strong to weak, and explains how these intersections work in decentralized AI systems. Examples of innovative projects are provided to illustrate real-world applications in each category. The table serves as a practical guide to understanding where blockchain and AI technologies meaningfully intersect, helping to identify impactful areas while avoiding overhyped or less feasible combinations.

Conclusion

The intersection of blockchain and AI presents transformative potential, but the path forward requires clarity and focus. Projects that truly innovate, such as those in Federated Learning (Privacy + Decentralization), Distributed Compute/Training (Performance + Scalability), and zkML (Verifiability + Security), are shaping the future of decentralized intelligence by addressing critical challenges like data privacy, scalability, and trust.

However, it’s equally important to approach the space with a discerning eye. Many so-called AI agents are merely wrappers around existing models, offering minimal utility and limited integration with blockchain. The real breakthroughs will come from projects that harness the strengths of both domains to solve real-world problems, rather than riding the wave of hype.

As we move forward, the AI-Blockchain Synergy Matrix becomes a powerful lens for evaluating projects, distinguishing impactful innovations from noise.

Looking ahead, the next decade will belong to projects that combine blockchain’s resilience with AI’s transformative potential to solve real challenges like energy-efficient model training, privacy-preserving collaborations, and scalable AI governance. The industry must embrace these focal points to unlock the future of decentralized intelligence.

Stay Updated on Crypto AI

For the daily news, insights, and updates at the intersection of Crypto and AI, join our Telegram community: 👉 Crypto AI Pulse

Follow on X: BotsnBlocks